Security

Introduction

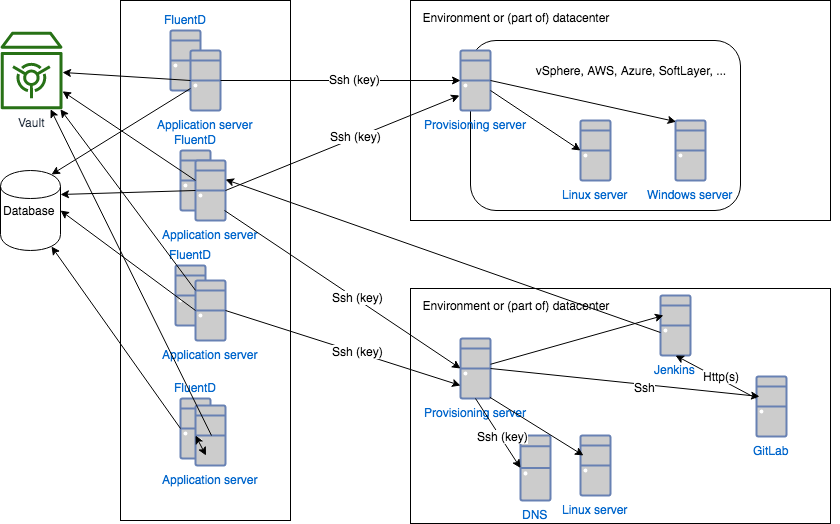

Infraxys provides several layers of security.

Security layers

SSH

Infraxys can consist of several application servers and provisioning servers. Connections between application servers and provisioning servers are always made over SSH and are secured with RSA keys.

Provisioning servers should never connect to the application servers over SSH.

Management of the RSA keys is done outside of Infraxys, although another Infraxys installation could do this part.

The directory /opt/infraxys/keys/.ssh should contain the private key, named “id_rsa”. This key is used to connect to provisioning servers. The public part of this key should be added to every provisioning server that needs to be accessible.

The topic “Limit logins to application servers” below explains how to restrict logins to application servers to specific teams. This further protects against unwanted access to provisioning servers and the actions that they can perform.
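As an illustration of managing this key outside of Infraxys, the sketch below checks that a private key file exists and is not accessible to group or others, which is the permission check OpenSSH itself applies to private keys. The function name `check_private_key` is hypothetical and not part of Infraxys; only the path /opt/infraxys/keys/.ssh/id_rsa comes from the text above.

```python
import stat
from pathlib import Path


def check_private_key(path):
    """Return a list of problems found with the private key file at `path`.

    Hypothetical helper for illustration; not shipped with Infraxys.
    """
    key = Path(path)
    if not key.is_file():
        return [f"{key} does not exist or is not a regular file"]

    problems = []
    mode = stat.S_IMODE(key.stat().st_mode)
    # Any group or other permission bit makes the key too widely accessible.
    if mode & (stat.S_IRWXG | stat.S_IRWXO):
        problems.append(f"{key} has mode {oct(mode)}; expected 0o600 or stricter")
    return problems
```

Calling `check_private_key("/opt/infraxys/keys/.ssh/id_rsa")` on an application server returns an empty list when the key is present and sufficiently restricted.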

Isolation in Docker containers

Scripts are started in Docker containers on provisioning servers. The container has no access to the host, so scripts and configuration can only be read if they are defined in, or shared with, the environment the script is running under.

Grants, teams and accounts

Grants, teams and accounts exist at project level. They cannot be interchanged between projects, except for the built-in grants.

These resources can only be modified by accounts that are members of the project's administrators team.

Grants

Grants are used to check permissions of the logged-in user. They are no more than descriptive labels of the access they grant.

There are built-in grants like ‘container.list’ and ‘packet.modify’ that are used by Infraxys itself.

Custom grants can be defined at project level. These can then be used, for example, to protect individual containers from modification.

All grants of the current account can be used in scripts and modules. This way, users can be granted rights to modify infrastructure in development but not in production. A detailed walkthrough on how to use this will be provided soon.

Examples are production.read, development.modify, …
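The sketch below shows how a script could use such grants to allow modifications in development but not in production. It assumes a `<environment>.<action>` naming convention, inferred from the examples above; Infraxys treats grants as plain labels, so this convention and the function `may_perform` are hypothetical.

```python
def may_perform(grants, environment, action):
    """Return True if the grant '<environment>.<action>' is among `grants`.

    Hypothetical helper: the naming convention is an assumption,
    not something Infraxys enforces.
    """
    return f"{environment}.{action}" in set(grants)


# Example grant list for an account that may modify development only.
grants = ["production.read", "development.read", "development.modify"]

if may_perform(grants, "development", "modify"):
    print("modification allowed in development")
if not may_perform(grants, "production", "modify"):
    print("modification refused in production")
```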

Teams

Teams are groups of grants. They are assigned to individual users.

Containers, environments and packets have dropdowns containing the teams of the current project and its parent projects. Selecting a team here ensures that only accounts that are members of that team can perform the corresponding task.

Both containers and environments have profile dropdowns to protect action executions, updates and copying/cloning. If the environment has one of these specified, then the selected profile is required for all containers that are part of the environment. This can be overridden by selecting another profile in a container.
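The override rule for one protected operation can be sketched as follows. This is only a model of the behaviour described above, with `None` standing for "nothing selected in the dropdown"; the function name is hypothetical.

```python
def effective_profile(environment_profile, container_profile):
    """Return the profile required for an operation on a container.

    A profile selected on the container overrides the one selected on
    its environment; None means no profile was selected at that level.
    """
    if container_profile is not None:
        return container_profile
    return environment_profile


# The environment requires "operations"; one container overrides it.
print(effective_profile("operations", None))        # environment value applies
print(effective_profile("operations", "database"))  # container value applies
```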

Accounts

User accounts are created in Auth0 for authentication. In Infraxys, the same account is created under a project.

The same applies to technical accounts, although they should be created in Auth0 as an application. Technical accounts are typically used to execute actions through the REST API.

Roles

Roles are normally created in projects where no code is run. They are then used on containers for actual environments.

The attributes of a role cannot be modified, but actions can be executed on the containers where the role is used, which makes roles suitable for enforcing certain conventions and behaviour.

Limit logins to application servers

Application servers can be configured so that only members of certain teams can log in to the application server.

This is useful if the application server has SSH access to provisioning servers on which only specific people or teams should be able to execute actions.

Container sharing

Containers can be shared with other projects and environments. No actions are created in the environments where the container is shared, but all attributes of the container are available through Velocity. Packet files that are at environment level will be available in the target environments in the same way.

Git branches

Modules are Git repositories for which branches can be protected.

These module branches are installed on one or more provisioning servers by an administrator with sufficient rights on those provisioning servers. If separate provisioning servers exist for production and non-production, then the master or prod branch is typically installed on the production instance, and a development branch and feature branches are deployed on the non-production instances.

If the same provisioning servers are used for several environments, then the branch can be enforced through tags on the environment resource in Infraxys.

Making sure certain modules are (always) enabled

When an action is executed, the module it belongs to is enabled, together with all modules it depends on.

Additional modules can be enabled through the attributes “enable_module_in_parents” and “always_enable_module”.

Sharing a container with an instance that has “always_enable_module” set to “1” and specifying that the container cannot be overridden will make sure that the module will be available in any environment under the project tree where the container is shared.

See “How modules are enabled” under Modules for more details.
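The first rule, enabling a module together with everything it depends on, amounts to a transitive closure over the dependency graph. The sketch below models it with a plain dictionary. The module names are invented, the function is hypothetical, and it assumes that always-enabled modules also pull in their own dependencies. It does not model “enable_module_in_parents”.

```python
def modules_to_enable(action_module, dependencies, always_enabled=()):
    """Return the set of modules enabled when an action is executed.

    `dependencies` maps a module name to the modules it depends on
    directly. `always_enabled` lists modules flagged as always enabled.
    """
    enabled = set()
    pending = [action_module, *always_enabled]
    while pending:
        module = pending.pop()
        if module in enabled:
            continue  # already handled; also guards against cycles
        enabled.add(module)
        pending.extend(dependencies.get(module, ()))
    return enabled


# Invented example graph: kubernetes -> aws -> core
dependencies = {"kubernetes": ["aws"], "aws": ["core"], "core": []}
print(sorted(modules_to_enable("kubernetes", dependencies, always_enabled=["audit"])))
```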

Currently executing user

The directory $INFRAXYS_ROOT/system is read-only. Besides the scripts “has_grant” and “has_team”, it contains a file called “current_user.json” and a subdirectory with the environment tags.

Example current_user.json:

    {
      "username": "user@example.com",
      "fullName": "Example User",
      "accountTeams": [
        {
          "name": "Administrators",
          "guid": "GUID-123",
          "projectGuid": "GUID-1"
        },
        {
          "name": "Infraxys developers",
          "guid": "GUID-7449",
          "projectGuid": "GUID-652"
        }
      ],
      "customGrants": [
        {
          "name": "delete-environment",
          "guid": "GUID-1333"
        }
      ],
      "projects": [
        {
          "name": "Infraxys",
          "guid": "GUID-1",
          "projects": [
            {
              "name": "Core",
              "guid": "GUID-614"
            },
            {
              "name": "Personal",
              "guid": "GUID-652",
              "projects": [
                {
                  "name": "Infraxys setup",
                  "guid": "GUID-635"
                }
              ]
            }
          ]
        }
      ]
    }
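A script can read this file directly. The sketch below loads current_user.json and answers three questions: is the user in a given team, does the user hold a given custom grant, and which projects are visible. It relies only on the fields shown in the example. The helper functions are hypothetical and are not the “has_grant” and “has_team” scripts, whose interface is not described here.

```python
import json
import os
from pathlib import Path


def load_current_user(root=None):
    """Load current_user.json from <root>/system (default: $INFRAXYS_ROOT)."""
    root = root or os.environ["INFRAXYS_ROOT"]
    path = Path(root) / "system" / "current_user.json"
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def in_team(user, team_name):
    """Return True if the user is a member of the team with this name."""
    return any(team["name"] == team_name for team in user.get("accountTeams", []))


def has_custom_grant(user, grant_name):
    """Return True if the user holds the custom grant with this name."""
    return any(grant["name"] == grant_name for grant in user.get("customGrants", []))


def project_names(projects):
    """Yield project names depth-first through the nested "projects" lists."""
    for project in projects:
        yield project["name"]
        yield from project_names(project.get("projects", []))
```

For example, a script could refuse a destructive step unless `has_custom_grant(load_current_user(), "delete-environment")` returns True.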